4 Setting Policy and Communicating Expectations

How can AI tools be effectively and responsibly utilized in education?

This chapter explores:

- how institutional policies can promote responsible and ethical use of AI tools;

- how instructional staff can create or co-create course AI policies that enhance learning;

- how AI usage guidelines can be shared and reinforced along with each assignment;

- how learning outcomes should be reconsidered now that powerful AI tools are widely available.

Integrating AI in Education

An attempt to ban students from using AI tools in all aspects of their coursework is not likely to be successful. Moreover, this approach is a disservice to students, who will likely be expected to use AI tools responsibly and effectively in their future workplaces. Dr. Sarah Elaine Eaton of the University of Calgary states that AI tools “can be used ethically for teaching, learning and assessment,” and that student use doesn’t necessarily constitute an academic integrity breach.[1]

AI is here to stay and will be integrated into most aspects of education. Accordingly, institutions, departments, and instructors need to set policy, clarify learning outcomes, and provide students with clear expectations on AI use for every assignment.

Setting Institutional Policy

An October 2023 report from the Canadian Digital Learning Research Association recommends developing institutional policies that promote the “effective, creative, equitable, and responsible use/nonuse” of AI tools and that support staff learning and experimentation with those tools.[2] See the table below for a selection of AI policy/guideline highlights from Canadian polytechnics.

| Institution | Key Policy Details |
| --- | --- |
| British Columbia Institute of Technology (BCIT) (see also: BCIT’s Official Position Statement) | |
| Kwantlen Polytechnic University | |
| Red River College Polytechnic | |
| Seneca Polytechnic | |

Educators and students should check with their institutions for emerging policy and guidance on appropriate use of AI tools. For a roundup of AI policies at educational institutions, visit Higher Education Strategy Associates’ Observatory on AI Policies in Canadian Post-Secondary Education.

Engaging Students on the Topic of AI

Discussion around AI tools should begin early in a course, ideally in the first week when introducing the course outline. This raises student awareness that AI tools are a part of the educational landscape, and positions the instructor as one who is aware of AI’s capabilities and is willing to talk about appropriate usage.

Creating a Course AI Policy

Course AI policies set clear expectations about acceptable and unacceptable uses of AI tools. Policies should clearly state how AI tools can be ethically used for coursework as well as procedures for documenting AI use on assignments. Depending on institutional and departmental protocols, there may be a set policy that instructors need to share with their class, or instructors may be able to set their own or co-create a policy with their students. In any case, instructors must ensure that their course’s AI policy aligns with their institution’s policy on AI use. They are also responsible for following institutional policies on consistency across different sections of a course and across courses within a program.

Several RRC Polytech instructors have reported success in engaging classes in establishing a course AI policy. Two RRC Polytech communication instructors conducted activities in which the classes were divided into groups and asked to draft lists of acceptable and unacceptable uses for AI tools on upcoming assignments. Each group then shared its lists, and the class collaboratively developed an AI policy for their course. In some cases, students set stricter policies than the instructor would have chosen.[3] Indeed, many students want to engage with AI tools but don’t want to miss out on developing critical thinking and other key skills.[4]

Both instructors found that co-creating an AI policy led to an increase in dialogue around AI tools throughout the course and a decrease in AI-based plagiarism compared to the previous term.[5] Research shows that engaging students in collaborative decision making around expectations leads to better uptake of a class policy, a more positive classroom culture, and increased engagement.[6]

See the following text box for an example of a collaboratively drafted AI policy.

Generative AI and COMM-1173

In our Week 2 class meeting on Sept. 8, we worked together to create a policy for use of Generative AI tools like ChatGPT in this course. This policy applies to our section of COMM-1173 only. For other courses, ask your instructor before using AI tools. Students in other sections of COMM-1173 should ask their instructor before using AI tools as part of their course work.

Acceptable uses of AI Tools

- To use it as a tutor/find out more about a topic after completing your course readings.

- In research, to generate a list of resources to explore.

- To generate a list of possible topics for a project.

- To generate an image for a presentation.

- To look for errors in your work (it is never okay for an AI tool to rewrite your work for submission; instead, ask it to generate a list of suggestions for improvement).

If you’d like to use AI tools to help you in a way that isn’t listed here, please contact me.

Unacceptable Uses of AI Tools

- Writing content for your assignment (an essay, paragraph, sentence), or content for you to adapt to put in your assignment.

- Citing the AI tool as a source in a research project.

- Paraphrasing research materials for your assignment.

- Generating ideas for a reflection assignment.

- Rewriting, revising, or reorganizing your work.

Other Considerations

- If you use a generative AI tool to help you with an assignment in any way, you must include a note at the bottom of your document that explains how you used the AI tool. Failing to note the ways that you used AI in your assignment could be considered an Academic Integrity breach.

- Here’s an example of how you can note your AI usage: I used ChatGPT to generate a list of twelve possible topics. I adapted one of these topics for my paper. When I finished writing, I asked ChatGPT to review my work and make suggestions about grammar, punctuation, and organization. It made twenty-four suggestions. I implemented some of these suggestions.

- If you use AI tools to help you with an assignment, save copies of your interactions with the AI tool. ChatGPT will do this automatically as long as you leave the Chat History & Training setting on.

- Make sure that you understand the limitations/weaknesses of any AI tool that you use. This article summarizes the major limitations of ChatGPT.

Setting Clear Expectations on Assignments

Students need to be presented with clear expectations about how AI tools can be used on each assignment. For courses with an established AI policy, students could be asked to revisit the policy when a new assignment is introduced, and report back about how AI tools can and can’t be used. Another approach is to find or create a scale that has multiple levels of AI use, and to indicate which level should be followed for each assignment. Here is one example of an AI Use Scale, which Tyler Steiner, Melissa Deroche, and Carleigh Friesen of the Teaching for Learning in Applied Education program at RRC Polytech adapted from an April 2024 publication by Perkins, Furze, Roe, and MacVaugh.[7]

![An image of a table preceded by an introductory paragraph. The heading reads AI Assessment Scale. The introductory paragraph reads: Across all levels, your critical thinking, analysis, and unique contributions are essential to the learning process. This guide provides clear instructions for using AI in your college work, showing how AI can support your learning while keeping your original ideas and understanding at the forefront. It outlines the different ways you can use AI—from working independently to collaborating with AI—and explains when and how to acknowledge AI’s role. You’re encouraged to use AI as a tool for growth, ensuring that the final product truly reflects your own knowledge and skills. Remember, using unmodified AI content without making it your own is not allowed. The table has three columns. The first row reads: Level, Description, Acknowledgement. The second row, first cell is shaded red and reads Level 1: Independent work. To the right, in the Description column, it reads: You must complete tasks entirely on your own, without AI assistance. This approach allows you to demonstrate and strengthen your individual skills and knowledge. To the right, in the Acknowledgement column, it reads: No acknowledgement required - the work is entirely your own. The third row, first cell is shaded orange and reads Level 2: AI-Assisted Ideation and Refinement. To the right, in the Description column, it reads: You may use AI to generate ideas and improve the structure and quality of your work. The core content remains your own, but AI can assist with brainstorming, refining structure, or checking spelling and grammar. This enhances your final product's quality while developing your skills in evaluating AI suggestions and maintaining originality in your work. To the right, in the Acknowledgement column, it reads: AI was used for idea generation and refinement of this work. [Specify the aspects where AI was used.] 
The fourth row, first cell is shaded yellow and reads Level 3: AI-Assisted Drafting. To the right, in the Description column, it reads: You can use AI to create initial drafts, which you then critically evaluate and revise. This allows you to use AI to provide a starting point, but you are responsible for reviewing, modifying, and ensuring the originality of the final product. This allows you to integrate AI with your work while developing your critical thinking skills. To the right, in the Acknowledgement column, it reads: AI was used to generate an initial draft, which was then critically revised and adapted for this work. [Explain your process of working with and modifying the AI-generated content.]. The fifth row, first cell is shaded green and reads Level 4: AI Collaboration. To the right, in the Description column, it reads: You may work collaboratively with AI to complete tasks while demonstrating effective and responsible AI use. This level allows you to use AI creatively while critically assessing the content it generates and maintaining ownership of the final product. To the right, in the Acknowledgement column, it reads: AI was used to generate content that was critically evaluated before use in this work. [Describe your collaborative process and how you ensured the final product reflects your knowledge and skills.]](https://pressbooks.openedmb.ca/app/uploads/sites/95/2024/01/2024-11-01-13-27-46-616-Gemoo-Snap.png)

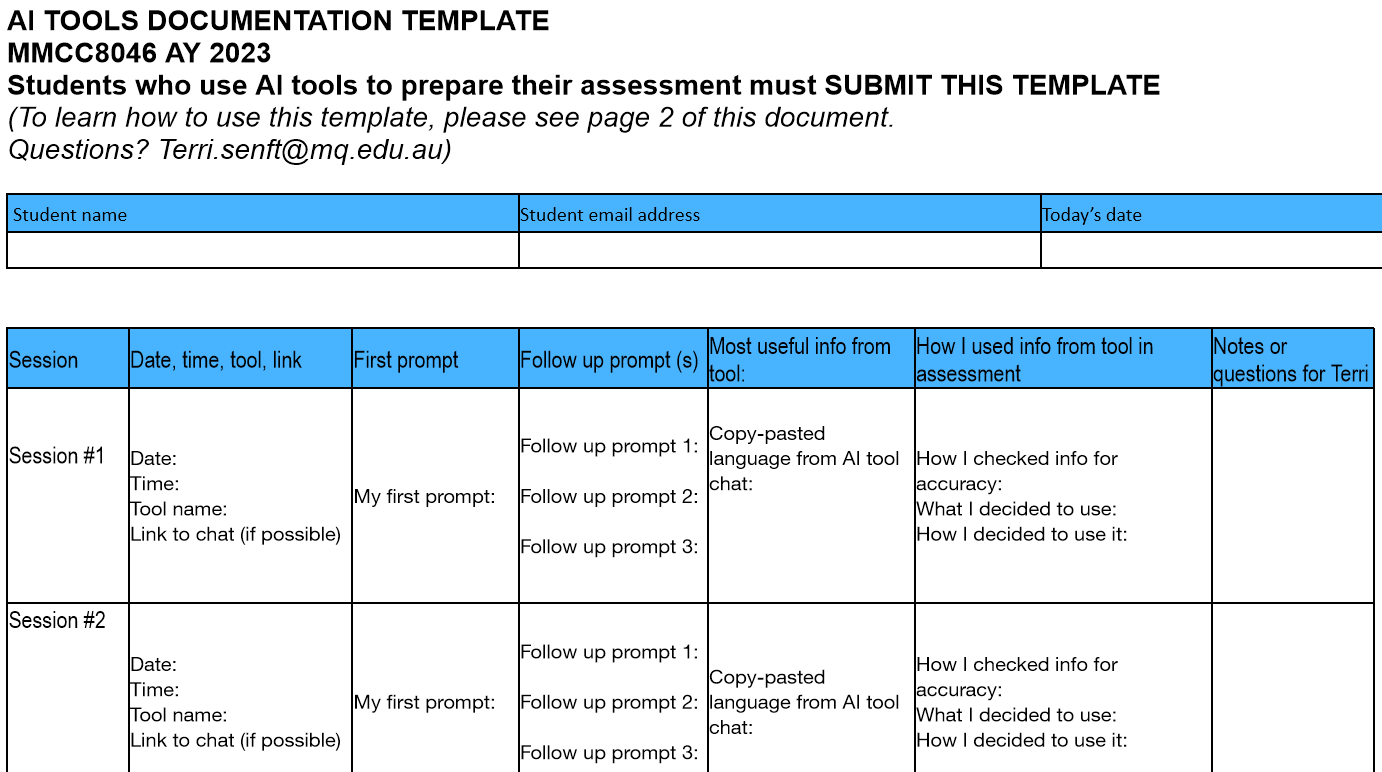

Many course and institutional policies around student use of AI tools require students to disclose the role that AI tools played in creating an assignment. This might involve a simple declaration in the assignment or footnotes that explain how AI was used. Theresa Senft of Macquarie University has required students to submit full documentation of AI usage on assignments, including the prompts used, information gained, and explanations of how that information was used. This task was designed to highlight the importance of documentation in AI usage, considering the numerous examples of AI-generated hallucinations and errors resulting in employment termination.[8] This type of exercise has several other benefits—it gives students an opportunity for feedback on their prompting and AI literacy, promotes critical thinking about how AI outputs are adapted and used, and models responsible use of AI tools.

The image below shows the beginning of Theresa Senft’s AI tools documentation template. Click the image to view the full template.

Clarifying Learning Outcomes

The rapid rise of powerful AI tools has reminded instructors of the need to critically consider how assignments connect to learning outcomes and real-world tasks. It also serves as a reminder to engage in dialogue with students about the learning outcomes for each assignment, why they are important, and how they will prepare students for the workplace. Such dialogue is useful at the start of each new module or theme and whenever an assignment is introduced. Carly Schnitzler of Johns Hopkins University suggests exploring the importance of course learning outcomes while crafting a course AI policy, with a provocative discussion question like “What’s the point of being in a writing class if language models can generate passable text and score well on exams?”[9]

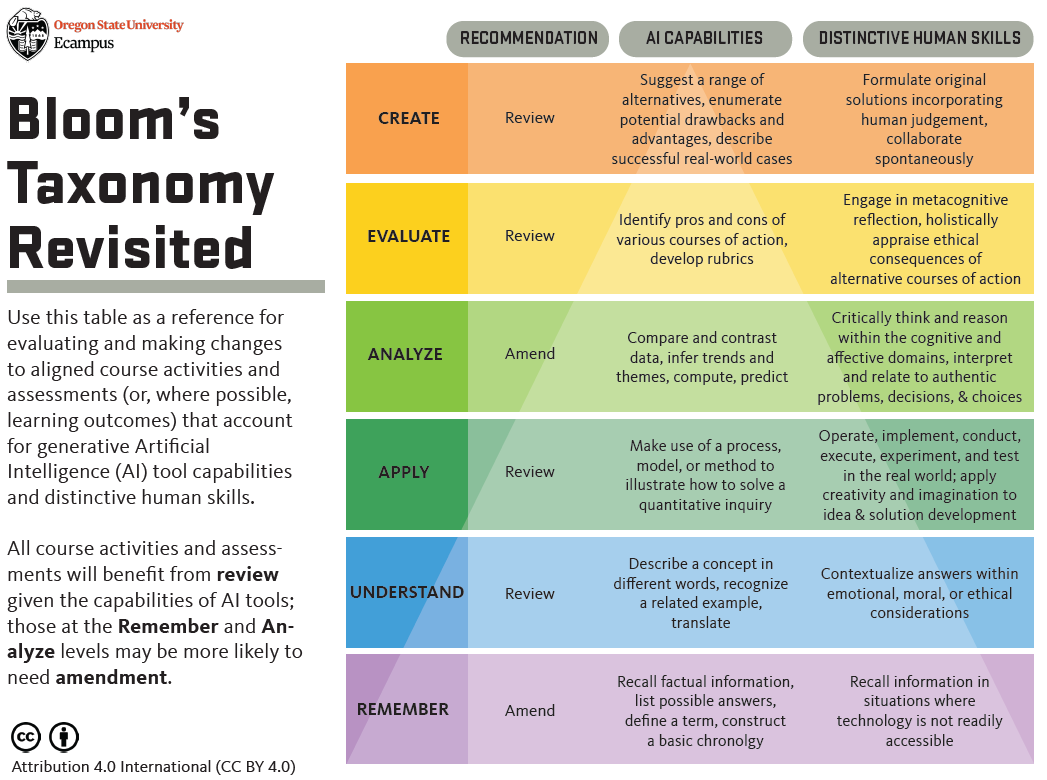

With the power of today’s AI tools, learning outcomes may need to be adjusted to ensure that they reflect skills that students will need in the workforce and that AI tools are not able to reasonably demonstrate. Oregon State University suggests an updated version of Bloom’s Taxonomy, which focuses on “distinctive human skills” that go beyond the capabilities of AI tools.[10]

The following image shows Oregon State University’s suggestions for updating Bloom’s Taxonomy. Click the image for more information, or download a PDF copy of Bloom’s Taxonomy Revisited.

Key Takeaways

- Institutions and instructors should create AI policies that encourage responsible and ethical use of AI tools.

- Co-creating an AI policy with students can lead to greater understanding and uptake of the policy.

- Students need clear guidelines on each assignment about how AI tools can and can’t be used.

- Learning outcomes may need to be revised to focus on distinctive human skills that go beyond the capabilities of AI tools.

- Educators need to clarify the importance of course learning outcomes, especially when it seems like an AI tool can complete the task reasonably well.

Exercises

- Investigate your institution’s policy on AI use in teaching and learning.

- Reflect on what a fair and reasonable AI use policy would look like in your courses. Would you engage students in co-creating a policy, and if so, how?

- Draft a plan for communicating AI policies at the beginning of your next course and as each assignment is presented.

- Consider whether any of your courses’ learning outcomes need to be updated. How could you update them to focus on distinctive human skills?

1. Sarah Elaine Eaton and Lorelei Anselmo, "Teaching and Learning with Artificial Intelligence Apps," University of Calgary, last modified January 12, 2023, https://taylorinstitute.ucalgary.ca/sites/default/files/teams/1/Resources/AI/Teaching-With-AI-Apps.pdf.

2. George Veletsianos, "Generative Artificial Intelligence in Canadian Post-Secondary Education: AI Policies, Possibilities, Realities, and Futures," Canadian Digital Learning Research Association, accessed January 30, 2024, https://www.d2l.com/resources/assets/cdlra-2023-ai-report/.

3. Anonymous focus group participants, November 2023.

4. Olina Banerji, "How Schools Are Coaching—or Coaxing—Teachers to Use ChatGPT," EdSurge, last modified August 3, 2023, https://www.edsurge.com/news/2023-08-03-how-schools-are-coaching-or-coaxing-teachers-to-use-chatgpt.

5. Anonymous focus group participants, November 2023; Anonymous RRC Polytech instructor, February 2024.

6. Hope Wilder, "Collaborative Classroom Management," Edutopia, last modified January 19, 2022, https://www.edutopia.org/article/collaborative-classroom-management/.

7. The Teaching for Learning in Applied Education team also consulted Elwell's AI Transparency Rubric (https://www.linkedin.com/posts/ryan-elwell-a2224667_aiassessment-aieducation-digitalpedagogies-activity-7234656960761978880-XnoP/), the RRC Polytech GenAI Use Expectations: Course outline/LEARN Sample Statements (internal document), and OpenAI's ChatGPT 4o.

8. Theresa Senft, "AI Tools Documentation," Exploring AI Pedagogy—A Community Collection of Teaching Reflections, last modified November 14, 2023, https://exploringaipedagogy.hcommons.org/2023/11/14/developing-ai-standards-of-conduct-as-a-class/.

9. Carly Schnitzler, "Developing AI Standards of Conduct as a Class," Exploring AI Pedagogy—A Community Collection of Teaching Reflections, last modified November 14, 2023, https://exploringaipedagogy.hcommons.org/2023/11/14/developing-ai-standards-of-conduct-as-a-class/.

10. Oregon State University, "Artificial Intelligence Tools," Online | Ecampus, accessed February 2, 2024, https://ecampus.oregonstate.edu/faculty/artificial-intelligence-tools/meaningful-learning/.