3. Theories of grammar and language acquisition

3.2. Generative grammar

In this textbook, the model that we will be learning together belongs to the family of models called generative grammar. It was Noam Chomsky who first proposed that models of grammar should be generative. He defines generative grammar as “a system of rules that in some explicit and well-defined way assigns structural descriptions to sentences” (Chomsky 1965: 8). In other words, a generative grammar uses rules to generate or “build” the structure of sentences. Santorini and Kroch (2007) define it as “an algorithm for specifying, or generating, all and only the grammatical sentences in a language.”

What’s an algorithm? It’s simply any finite, explicit procedure for accomplishing a task, beginning in some initial state and terminating in a defined end state. Computer programs are algorithms, and so are recipes, knitting patterns, the instructions for assembling an Ikea bookcase, and lists of steps for creating a budget.
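To make the idea of an algorithm concrete for grammar, here is a minimal sketch in Python (our own choice of language; nothing in this textbook depends on it). It borrows the rules of Grammar C, which appear later in this section, and, as a simplification made only so that the derivation can finish, treats the word choices for sentence (1a) as extra rules. The names `RULES` and `derive` are our own. The procedure starts in an initial state (just the category Sentence), repeatedly rewrites the leftmost category that has a rule, and stops in a defined end state, when only words remain.

```python
# Rules of Grammar C, plus (as a simplification) the word choices
# for sentence (1a) written as if they were rules too.
RULES = {
    "Sentence": ["Subject", "Predicate"],
    "Subject": ["Name"],
    "Predicate": ["Verb", "Object"],
    "Object": ["Determiner", "Noun"],
    "Name": ["Philip"],
    "Verb": ["ate"],
    "Determiner": ["a"],
    "Noun": ["sandwich"],
}

def derive(start="Sentence"):
    """Rewrite the leftmost category until only words remain; return every step."""
    state = [start]                        # initial state
    steps = [" ".join(state)]
    while True:
        # Position of the leftmost symbol that still has a rule, if any.
        index = next((i for i, s in enumerate(state) if s in RULES), None)
        if index is None:                  # end state: nothing left to rewrite
            return steps
        state = state[:index] + RULES[state[index]] + state[index + 1:]
        steps.append(" ".join(state))

for step in derive():
    print(step)
```

Each printed line is one step of the derivation, from Sentence to Philip ate a sandwich. The procedure is finite and explicit in exactly the sense described above, even though the grammar itself is tiny.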

An important point to keep in mind is that it is often difficult to construct an algorithm for even trivial tasks. A quick way to appreciate this is to try describing how to tie a bow. Like speaking a language, tying a bow is a skill that most of us master around school age and perform more or less unconsciously thereafter. But describing (not demonstrating!) how to do it is not easy, especially if we’re not familiar with the technical terminology of knot-tying. In an analogous way, constructing a generative grammar of English is a completely different task from speaking the language, and a much more difficult one (or at least difficult in a different way)! Just like a cooking recipe, a generative grammar needs to specify the ingredients and procedures that are necessary for generating grammatical sentences.

Not all models of grammar use a generative framework. Other kinds of models treat language as the product of repeating memorized fragments, or of probabilistic modelling; the latter is closer to how large language models produce language.

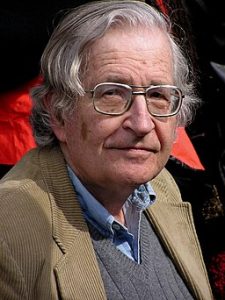

Noam Chomsky

Noam Chomsky (b. 1928) is perhaps the most well-known linguist in the world. Beginning with his 1955 dissertation Transformational Analysis and his 1957 book Syntactic Structures, Chomsky revolutionized the way we think about language and reshaped the modern field of linguistics.

As we already learned in this section, Chomsky was the first to explicitly model language as a rule-governed system, launching the study of generative grammar. Another of Chomsky’s foundational proposals was Universal Grammar, the hypothesis that humans are genetically endowed with the capacity for language. We will learn more about Universal Grammar in the remainder of this chapter.

Grammatical theory has changed a lot since 1955, but Chomsky has been a key player throughout this time. Most of the grammatical models proposed since then have been developed either within a Chomskyan framework, sometimes by Chomsky himself, or in direct opposition to it.

Chomsky continued to publish papers and give talks well into his retirement. He is professor emeritus at the Massachusetts Institute of Technology and a laureate professor at the University of Arizona. Chomsky is also well-known for his political writings and activism.

Grammar complexity

When we model grammar, one of our goals is to make our model as simple as possible while still accounting for all of the data. Our model of grammar should be able to generate all of the sentences that are possible in the language and none of the sentences that are not possible. But when we say our model or our grammar should be simple, what we mean is that the system of rules should be simple—not the output of those rules, the language. The complexity of language is an observable phenomenon that we are trying to model. We want to account for that complexity in the simplest way possible.

Let’s consider the three sentences in (1) and the three grammars in the shaded box.

| (1) | a. | Philip ate a sandwich. |
|-----|----|------------------------|
|     | b. | Leila saw a cat. |
|     | c. | Kareem wants a present. |

All of the sentences in (1) follow a very similar pattern. There are certain kinds of words: names like Philip, Leila, and Kareem; verbs like ate, saw, and wants; the determiner a; and nouns like sandwich, cat, and present. You can swap a word for another word of the same category. For example, you could say Philip saw a sandwich or Kareem wants a cat instead. But you can’t swap in a word from a different category, as in *Saw ate a sandwich or *Leila sandwich a cat. (The asterisk marks a sentence as ungrammatical.) We can represent this pattern with a set of rules.

The three grammars in the shaded box can each be considered a model that represents this pattern. These grammars consist of rules that group words into units and tell you what category of word belongs in each unit, and in what order. For example, Grammar A says that a sentence consists of a name, a verb, and a noun, in that order.

Grammar A

Sentence → Name Verb Noun

Grammar B

Sentence → Name Verb Determiner Noun

Grammar C

Sentence → Subject Predicate

Subject → Name

Predicate → Verb Object

Object → Determiner Noun
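Because these grammars are explicit, they can be written down as data and run. The following Python sketch is one possible encoding, not a standard one, and all names in it are our own: each grammar maps a category to its possible expansions, and a small lexicon (an assumption of the sketch) holds only the words from the sentences in (1). The function `generate` lists every sentence a grammar can build from those words.

```python
from itertools import product

# Each grammar maps a category to a list of possible expansions.
GRAMMAR_A = {"Sentence": [["Name", "Verb", "Noun"]]}
GRAMMAR_B = {"Sentence": [["Name", "Verb", "Determiner", "Noun"]]}
GRAMMAR_C = {
    "Sentence": [["Subject", "Predicate"]],
    "Subject": [["Name"]],
    "Predicate": [["Verb", "Object"]],
    "Object": [["Determiner", "Noun"]],
}

# Assumed lexicon: just the words that appear in (1), by category.
LEXICON = {
    "Name": ["Philip", "Leila", "Kareem"],
    "Verb": ["ate", "saw", "wants"],
    "Determiner": ["a"],
    "Noun": ["sandwich", "cat", "present"],
}

def expand(symbol, grammar):
    """Return every word sequence (as a tuple) that `symbol` can produce."""
    if symbol in LEXICON:                  # a word category: any of its words
        return [(word,) for word in LEXICON[symbol]]
    results = []
    for rhs in grammar[symbol]:            # each expansion of the category
        parts = [expand(part, grammar) for part in rhs]
        for combo in product(*parts):      # every combination, in order
            results.append(tuple(w for piece in combo for w in piece))
    return results

def generate(grammar):
    """All sentences the grammar generates, as strings ending in a period."""
    return {" ".join(words) + "." for words in expand("Sentence", grammar)}

print(len(generate(GRAMMAR_A)), len(generate(GRAMMAR_B)),
      generate(GRAMMAR_B) == generate(GRAMMAR_C))
```

This prints `27 27 True`. Grammar A produces strings such as “Philip ate sandwich.”, with no determiner, while Grammars B and C produce exactly the same set of sentences, including Philip saw a sandwich and Kareem wants a cat.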

Which grammar is the simplest? Grammars A and B have one rule each. Grammar A’s rule has three components, while Grammar B’s rule has four, so Grammar B is slightly more complex. Grammar C, on the other hand, has four rules, which makes it much more complex! But can all three grammars accurately reflect the complexity of language?

The difference between Grammar A and Grammar B is that Grammar B has an extra component: a determiner. A determiner is a word like a or the. If we look at the sentences in (1), we can see that these sentences do have a determiner. Therefore, even though Grammar A is perhaps slightly simpler, it does not model the fact that we need the word a in these sentences. In other words, it is too simple to explain the patterns we find in our data. Grammar B, on the other hand, can model all of the sentences in (1).
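This reasoning can be checked mechanically. In the Python sketch below (the function name `accepts` and the word lists are our own, drawn from (1)), a sentence matches a single flat rule if it has exactly one word per category, in order. Grammar A rejects all three sentences in (1), because they have four words and its rule has only three slots; Grammar B accepts all of them.

```python
# Word lists taken from the sentences in (1).
LEXICON = {
    "Name": {"Philip", "Leila", "Kareem"},
    "Verb": {"ate", "saw", "wants"},
    "Determiner": {"a"},
    "Noun": {"sandwich", "cat", "present"},
}

# Grammars A and B each consist of a single, flat rule for Sentence.
GRAMMAR_A = ["Name", "Verb", "Noun"]
GRAMMAR_B = ["Name", "Verb", "Determiner", "Noun"]

def accepts(rule, sentence):
    """True if the sentence has one word per category in `rule`, in order."""
    words = sentence.rstrip(".").split()
    if len(words) != len(rule):
        return False
    return all(word in LEXICON[cat] for word, cat in zip(words, rule))

DATA = ["Philip ate a sandwich.", "Leila saw a cat.", "Kareem wants a present."]

for sentence in DATA:
    print(sentence, "A:", accepts(GRAMMAR_A, sentence),
          "B:", accepts(GRAMMAR_B, sentence))
```

The same check also rules out the starred examples from earlier: `accepts(GRAMMAR_B, "Leila sandwich a cat.")` returns `False`, because sandwich is not in the verb list.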

The difference between Grammar B and Grammar C is that Grammar C breaks up the sentence into more units. This makes the grammar even more complex than Grammar B. Grammar B only uses one unit, which it names sentence. Grammar C creates the exact same output, a sentence consisting of a name, verb, determiner, and noun, but breaks the sentence into two sub-units called the subject and the predicate, and it breaks the predicate into two sub-units called the verb and the object. If we do not find evidence for the sub-units in Grammar C, then Grammar B is the better grammar because it is simpler. However, if we do find evidence for the units in Grammar C, then we might find that Grammar B is too simple to explain the complexity of language and that Grammar C is better.
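One way to see the difference between Grammars B and C is to compare the structure each one assigns, not just the words it outputs. This Python sketch (function and variable names are our own) builds a labelled bracketing for sentence (1a) under each grammar. It assumes that each category has exactly one expansion, which is true of both grammars here, and that the input is a sentence the grammar actually generates; it does not check the words against a lexicon.

```python
# One expansion per category (true of Grammars B and C).
GRAMMAR_B = {"Sentence": ["Name", "Verb", "Determiner", "Noun"]}
GRAMMAR_C = {
    "Sentence": ["Subject", "Predicate"],
    "Subject": ["Name"],
    "Predicate": ["Verb", "Object"],
    "Object": ["Determiner", "Noun"],
}

def build(symbol, grammar, words):
    """Build a labelled bracketing for `symbol`, consuming words left to right.

    Returns the bracketing and the list of words not yet used.
    """
    if symbol not in grammar:              # word category: covers one word
        return f"[{symbol} {words[0]}]", words[1:]
    parts = []
    for child in grammar[symbol]:          # build each sub-unit in order
        tree, words = build(child, grammar, words)
        parts.append(tree)
    return f"[{symbol} {' '.join(parts)}]", words

words = "Philip ate a sandwich".split()
for label, grammar in [("B", GRAMMAR_B), ("C", GRAMMAR_C)]:
    tree, leftover = build("Sentence", grammar, words)
    print(label, tree)
```

Grammar B yields [Sentence [Name Philip] [Verb ate] [Determiner a] [Noun sandwich]], while Grammar C yields [Sentence [Subject [Name Philip]] [Predicate [Verb ate] [Object [Determiner a] [Noun sandwich]]]]. The words are identical; only Grammar C adds the Subject, Predicate, and Object units, which is why we need evidence before preferring it.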

It is also possible to make a grammar with zero rules. In this grammar, you would just list all of the possible sentences of the language. When we’re working with just the three sentences in (1), that might be the simplest grammar. But real languages have more than three sentences!
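Here is a small Python sketch of that trade-off, using only the words in (1); the variable names are our own. The zero-rule grammar is simply a set of the three sentences. Grammar B’s single rule, filled in with the same words, yields more sentences, including Philip saw a sandwich from the discussion of (1), which the list cannot produce.

```python
from itertools import product

# Zero-rule "grammar": just a list of the sentences in (1).
LIST_GRAMMAR = {
    "Philip ate a sandwich.",
    "Leila saw a cat.",
    "Kareem wants a present.",
}

# Grammar B's single rule, Sentence -> Name Verb Determiner Noun,
# filled in with the same words that appear in (1).
NAMES = ["Philip", "Leila", "Kareem"]
VERBS = ["ate", "saw", "wants"]
DETERMINERS = ["a"]
NOUNS = ["sandwich", "cat", "present"]

GRAMMAR_B = {
    f"{name} {verb} {det} {noun}."
    for name, verb, det, noun in product(NAMES, VERBS, DETERMINERS, NOUNS)
}

print(len(LIST_GRAMMAR), len(GRAMMAR_B))
print("Philip saw a sandwich." in LIST_GRAMMAR)
print("Philip saw a sandwich." in GRAMMAR_B)
```

This prints `3 27`, then `False` and `True`: every sentence in the list is also generated by Grammar B, but not the other way round. To cover each new sentence, the list grammar would have to grow, while the rule stays the same.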

In fact, we will see later that languages have an infinite number of possible sentences. So the real challenge in modelling grammar is to find a finite set of rules that can generate an infinite set of sentences!
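To get a feel for how a finite set of rules could produce endlessly many sentences, consider a hypothetical rule that is not part of Grammars A, B, or C: Sentence → Name thinks that Sentence. Because the category Sentence appears on both sides of the arrow, the rule can apply to its own output again and again. The Python sketch below starts from sentence (1a) and applies the rule a chosen number of times; the rule, the word thinks, and the function name `embed` are all our own illustrative assumptions.

```python
# HYPOTHETICAL recursive rule (not part of Grammars A, B, or C):
#   Sentence -> Name thinks that Sentence
# The starting point is sentence (1a), which Grammar B generates.
BASE = ["Philip", "ate", "a", "sandwich"]
NAMES = ["Philip", "Leila", "Kareem"]

def embed(depth):
    """Apply the hypothetical rule `depth` times, wrapping the sentence each time."""
    words = list(BASE)
    for i in range(depth):
        # Cycle through the names so that the subjects vary.
        subject = NAMES[(i + 1) % len(NAMES)]
        words = [subject, "thinks", "that"] + words
    return " ".join(words) + "."

for depth in range(3):
    print(embed(depth))
```

This prints Philip ate a sandwich., then Leila thinks that Philip ate a sandwich., then Kareem thinks that Leila thinks that Philip ate a sandwich. Each application adds three words, so there is no longest sentence: whatever sentence the rules produce, applying the rule once more yields a longer one.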

Some useful distinctions

When we are talking about our model of grammar, there are some useful distinctions we should make.

Competence and performance

Sometimes when we produce language, words don’t come out exactly the way we intend. Because of this, we need to distinguish between competence and performance. If you have linguistic competence in a language, you have acquired the grammatical rules necessary to produce that language. If you have linguistic performance, the language you produce conforms to the rules of the grammar you are using.

Most of the time, we have both competence and performance. But it is possible to have competence without performance. For example, if you are drunk or sleepy, you are more likely to misspeak. You still have the rules of grammar in your head, so you have competence. But, in this case, you may have trouble accessing or implementing the grammar rules. When the end product of your language use does not conform to the rules of grammar in your head, you do not have performance.

You can also have performance without competence. For example, say you memorize a sentence from a language you don’t speak. You can repeat it, perhaps even flawlessly, so you have linguistic performance. However, you do not have the grammatical rules in your mind necessary to construct that sentence from scratch. When you are only repeating what you have memorized, you don’t have competence in that language.

In generative grammar, our goal is to model the internal rules of language, so we are concerned primarily with competence, not performance. However, other language researchers, such as psycholinguists, are interested in performance.

I-language and E-language

The next distinction we should make is between I-language and E-language. I-language stands for internal language and refers to the system of grammatical rules that an individual language user has in their mind. Everyone has a slightly different I-language. E-language, on the other hand, stands for external language and refers to how language is externalized, including how it is used in a community. Because everyone has a slightly different I-language, and because of the effects of linguistic performance, E-language might not be consistent. In generative grammar, we are trying to model the properties of human I-languages. However, we cannot access I-language directly, since it is a cognitive object. Instead, we infer the properties of I-language from the properties of particular E-languages.

I-language is also sometimes called Language (with a capital L), while E-language can be called language (with a lowercase l).

In our generative model of grammar, our goal is to build a model of I-language, whereas other types of language researchers may be interested in E-language.

Synchronic and diachronic

It is sometimes useful to look at how language changes over time, which is called the diachronic study of language. Although historical linguistics can be very interesting indeed, our model of grammar needs to be a model of language at a particular time, which is called the synchronic study of language. Often, this means studying modern languages, but it can also mean studying a historical language at a particular period.

The history of a language is not encoded in its grammar. Most speakers, unless they have specifically studied it, do not know the history of the languages they speak (and those who have studied it very likely did so long after acquiring the language as young children). Because the history of the language is not part of what most speakers know, we cannot use a historical explanation in our model of grammar. A historical explanation can be useful for explaining why the grammar has one set of rules and not another, but the rules themselves need to work as a system independent of where they came from. As such, when we build a model of generative grammar, we are primarily interested in synchronic grammar, whereas other language researchers, such as historical linguists, are interested in diachronic grammar.

Let’s use riding a bicycle as an analogy. You could know the history of your bicycle: where each piece of metal was mined and smelted, and where the bike was assembled. All of those processes had to happen for the bike to exist for you to ride, but that knowledge isn’t necessary to be able to ride it. What is necessary is that your bike is properly assembled, with the pedals linked to one of the wheels by a chain. When you pedal, the pedals move the chain, which in turn rotates the wheel and moves the bike forward. This chain reaction between the parts of your bike is a bit like a grammar: the pedals, the chain, and the wheel are different parts of a system that work together to make the bike go. It doesn’t matter whether the chain on your bike is the original one or a replacement; it only matters that it works now.

In the same way, it is not necessary to know the history of your language in order to use the language. When we are trying to explain how our model of Language works, it doesn’t matter if a particular rule was original to the language or borrowed from a different language—it is part of the system now, and we need to explain how it works now.

Key takeaways

- Generative grammar is an approach to modelling language, developed by Noam Chomsky, that uses rules to generate or build sentences.

- Our goal is to make a model of grammar that is as simple as possible while still accounting for all of the complexity of Language.

- Our model should account for competence (the underlying rules of language that a person has acquired) rather than performance (the language that people produce); for I-language (the internal system of rules) rather than E-language (how language is used in a community); and for synchronic language (how language is used at a particular point in time) rather than diachronic language (how language changes over time).


References and further resources

Attribution

Portions of this section are adapted from the following CC BY-NC source:

Santorini, Beatrice, and Anthony Kroch. 2007. The syntax of natural language: An online introduction. https://www.ling.upenn.edu/~beatrice/syntax-textbook

For a general audience

Enos, Casey. n.d. Noam Chomsky. Internet encyclopedia of philosophy. https://iep.utm.edu/chomsky-philosophy

Academic sources

Chomsky, Noam. 1955. Transformational analysis. Doctoral dissertation, University of Pennsylvania.

Chomsky, Noam. 1957. Syntactic structures. The Hague: Mouton.

Chomsky, Noam. 1965. Aspects of the theory of syntax. Cambridge, MA: MIT Press.

Glossary

- generative grammar: Any model of grammar that uses rules to generate or "build" the structures of a language. This model should be able to produce all of the grammatical sentences in the language and no others.

- algorithm: A procedure, process, or system of rules used to solve a problem.

- Universal Grammar: Chomsky's theory that there is an innate, language-specific genetic component underlying the human capacity for language.

- competence: Having acquired the rules of grammar in a particular language, regardless of the ability to produce grammatical language in a particular instance.

- performance: The production of language that conforms to the rules of grammar of a particular language, regardless of whether the rules in question have been acquired by the language user.

- I-language: Internal language. The system of grammatical rules that an individual language user has in their mind.

- E-language: External language. The forms of language that are produced by individuals or in a community.

- diachronic: Describing how something (such as language) has changed over time.

- synchronic: Describing something (such as language) at a particular point in time.